Kubernetes clusters have revolutionized the way organizations manage containerized applications, providing a powerful framework for automating deployment, scaling, and operations. As businesses increasingly adopt cloud-native architectures, understanding Kubernetes becomes essential for developers and IT professionals alike.

With its robust orchestration capabilities, Kubernetes allows teams to efficiently manage resources and ensure high availability, making it a cornerstone of modern DevOps practices. Whether it’s deploying microservices or optimizing resource utilization, Kubernetes clusters offer the flexibility and resilience needed in today’s fast-paced digital landscape.

Understanding how the parts of a Kubernetes cluster fit together helps teams use it to its full potential and adapt as their technology environment evolves.

Overview of Kubernetes Clusters

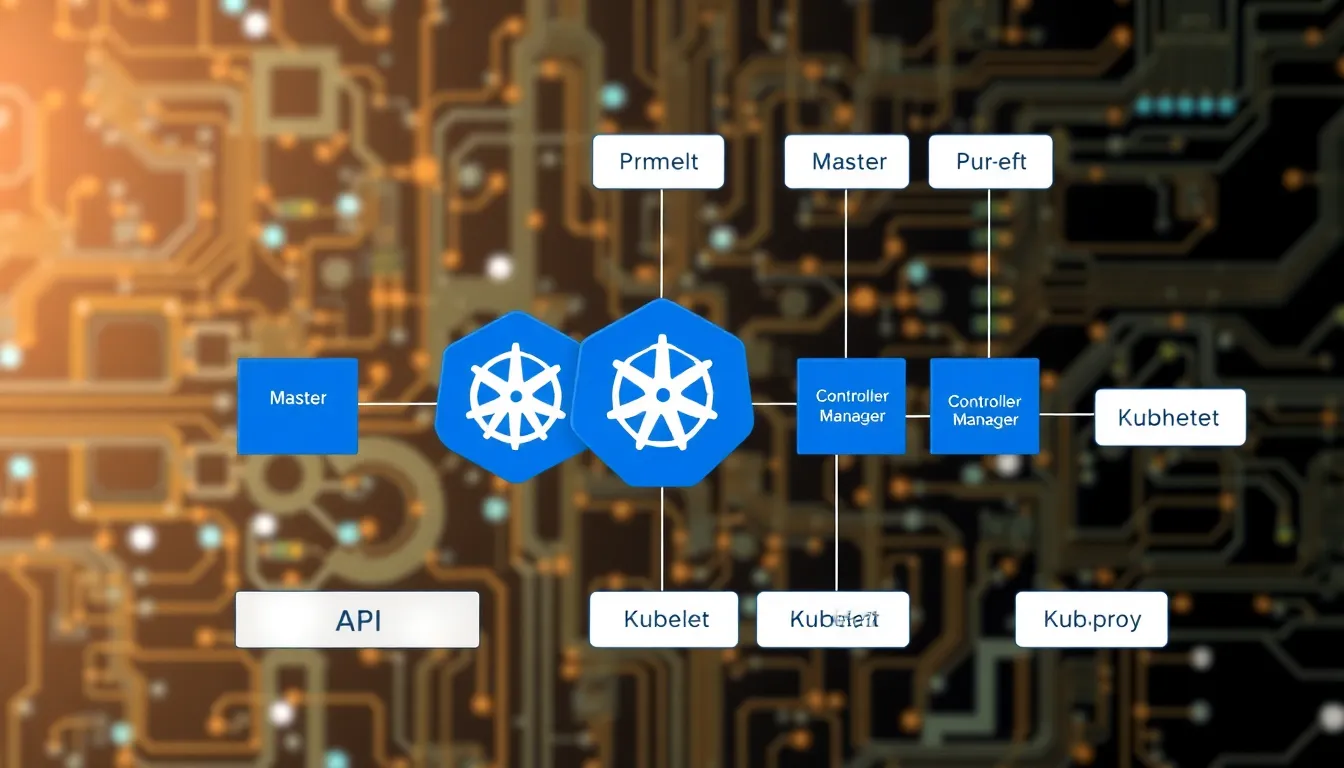

Kubernetes clusters consist of a set of physical or virtual machines that run containerized applications. Each cluster includes at least one master node (now usually called a control plane node) that manages the cluster’s state, and one or more worker nodes that host the application workloads.

Components of Kubernetes Clusters

- Master Node: The master node controls the cluster. It schedules workloads and maintains the desired state of the applications.

- Worker Nodes: Worker nodes execute the containerized applications. Each node includes a container runtime, such as containerd or CRI-O, to run the containers.

- etcd: etcd is a consistent, distributed key-value store that holds all cluster data, including configuration and state.

- Kubelet: The kubelet runs on each worker node and makes sure the containers described in the pods assigned to that node are running and healthy.

- Kube-Proxy: Kube-proxy runs on each node and maintains the network rules that let traffic from inside and outside the cluster reach the pods behind a Service.

Cluster Architecture

Kubernetes clusters operate on a master-worker architecture, allowing efficient resource allocation and load balancing. This architecture supports scalability and high availability. When a worker node fails, Kubernetes automatically recreates the pods managed by controllers, such as Deployments, on other available nodes.
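
The desired-state model behind this behavior can be illustrated with a toy control loop. The sketch below is not Kubernetes code: the `Cluster` class, the `reconcile` function, the `web-N` pod names, and the "fewest pods first" placement rule are hypothetical simplifications. Each pass treats pods on failed nodes as lost, then creates or removes pods until the actual replica count matches the desired one.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical toy model of cluster state (not a Kubernetes API)."""
    nodes: dict[str, bool]                                # node name -> healthy?
    pods: dict[str, str] = field(default_factory=dict)    # pod name -> node name
    ids: itertools.count = field(default_factory=itertools.count)


def reconcile(cluster: Cluster, desired_replicas: int) -> None:
    """One pass of a simplified control loop for a single workload."""
    healthy = sorted(name for name, ok in cluster.nodes.items() if ok)
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    # Observe: pods on failed nodes no longer count toward the actual state.
    for pod, node in list(cluster.pods.items()):
        if not cluster.nodes[node]:
            del cluster.pods[pod]

    # Act: add replacement pods, placing each on the healthy node with the fewest pods.
    while len(cluster.pods) < desired_replicas:
        load = {name: 0 for name in healthy}
        for node in cluster.pods.values():
            load[node] += 1
        target = min(healthy, key=lambda name: (load[name], name))
        cluster.pods[f"web-{next(cluster.ids)}"] = target

    # Act: remove the most recently created pods if there are too many.
    while len(cluster.pods) > desired_replicas:
        cluster.pods.pop(next(reversed(cluster.pods)))


cluster = Cluster(nodes={"node-a": True, "node-b": True, "node-c": True})
reconcile(cluster, desired_replicas=3)
cluster.nodes["node-b"] = False      # simulate a worker node failure
reconcile(cluster, desired_replicas=3)
print(cluster.pods)  # {'web-0': 'node-a', 'web-2': 'node-c', 'web-3': 'node-a'}
```

In a real cluster this work is split between components: controllers such as the ReplicaSet controller create replacement pods, and the scheduler decides which node each one runs on.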

Benefits of Kubernetes Clusters

- Automation: Kubernetes automates tasks such as deployment, scaling, and load balancing, significantly reducing manual efforts.

- Scalability: Clusters can easily scale up or down based on the resource requirements of applications.

- High Availability: Kubernetes ensures applications remain available by reallocating workloads during node failures.

- Resource Management: Resource requests and limits let Kubernetes pack workloads onto nodes efficiently, which helps control costs and keeps performance predictable.

Kubernetes clusters function as a unified framework for deploying and managing applications in cloud environments, making them crucial for organizations pursuing digital transformation.

Key Components of Kubernetes Clusters

Kubernetes clusters consist of several key components that work together to manage and orchestrate containerized applications. Understanding these components is essential for effective utilization and operation of Kubernetes.

Master Node

The master node runs the control plane of the Kubernetes cluster. It oversees cluster management, scheduling, and the overall state of the system. Core components of the master node include:

- API Server: Acts as the communication hub, handling REST requests and serving as the frontend for the control plane.

- Controller Manager: Runs the cluster’s controllers (for example, the node and replication controllers), each of which watches the cluster’s state and works to bring it in line with the desired state.

- Scheduler: Assigns workloads to worker nodes based on resource availability and predefined criteria, ensuring efficient utilization of resources.

- etcd: Functions as the cluster’s data store, keeping configuration data and state information in a consistent, highly available key-value store.
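
The scheduler’s job can be pictured as two steps: filter out nodes that cannot fit a pod’s resource requests, then score the remaining nodes and pick the best one. kube-scheduler does organize its work into filtering and scoring phases, but the single scoring rule below (prefer the node left with the most free CPU and memory, loosely in the spirit of its "least allocated" strategy) and the `Node` and `schedule` names are simplifying assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_alloc_m: int          # allocatable CPU in millicores
    mem_alloc_mi: int         # allocatable memory in MiB
    cpu_requested_m: int = 0  # CPU already requested by pods on this node
    mem_requested_mi: int = 0


def schedule(nodes: list[Node], cpu_m: int, mem_mi: int) -> Optional[str]:
    """Pick a node for a pod with the given requests, or None if none fits."""
    # Filter: keep nodes whose unrequested capacity covers the pod's requests.
    feasible = [
        n for n in nodes
        if n.cpu_alloc_m - n.cpu_requested_m >= cpu_m
        and n.mem_alloc_mi - n.mem_requested_mi >= mem_mi
    ]
    if not feasible:
        return None  # a real pod would stay Pending

    # Score: average fraction of CPU and memory still free after placement.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_alloc_m - n.cpu_requested_m - cpu_m) / n.cpu_alloc_m
        mem_free = (n.mem_alloc_mi - n.mem_requested_mi - mem_mi) / n.mem_alloc_mi
        return (cpu_free + mem_free) / 2

    best = max(feasible, key=score)
    best.cpu_requested_m += cpu_m      # record the reservation on the chosen node
    best.mem_requested_mi += mem_mi
    return best.name


nodes = [Node("node-a", 4000, 8192, cpu_requested_m=3000), Node("node-b", 2000, 4096)]
print(schedule(nodes, cpu_m=500, mem_mi=1024))   # node-b
print(schedule(nodes, cpu_m=1800, mem_mi=512))   # None: no node has 1800m CPU left
```

Note that the comparison is against requested capacity, not live usage, which is why accurate resource requests matter for good placement.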

Worker Nodes

Worker nodes host containerized applications and execute tasks assigned by the master node. Key components of worker nodes include:

- Kubelet: An agent that runs on each worker node. It ensures that containers are running as expected and reports the node’s status to the control plane through the API server.

- Kube-Proxy: Maintains network rules on each node so that traffic sent to a Service is spread across the pods backing it, supporting service discovery and load balancing.

- Container Runtime: The software that actually runs the containers. Common runtimes include containerd and CRI-O, which implement the Container Runtime Interface (CRI); Docker Engine can still be used through the cri-dockerd adapter.
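
How a Service’s traffic reaches pods can be sketched in two parts: a label selector determines which ready pods back the Service, and connections are spread across their IP addresses. The pod records, IPs, and the `endpoints` function below are hypothetical. Round-robin is used for simplicity; kube-proxy’s IPVS mode defaults to round-robin, whereas its iptables mode picks a backend at random.

```python
import itertools

# Hypothetical pod records: name, labels, pod IP, and readiness.
pods = [
    {"name": "web-1", "labels": {"app": "web"}, "ip": "10.0.0.11", "ready": True},
    {"name": "web-2", "labels": {"app": "web"}, "ip": "10.0.0.12", "ready": True},
    {"name": "web-3", "labels": {"app": "web"}, "ip": "10.0.0.13", "ready": False},
    {"name": "db-1", "labels": {"app": "db"}, "ip": "10.0.0.21", "ready": True},
]


def endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """IPs of ready pods whose labels include every key/value in the selector."""
    return [
        p["ip"] for p in pods
        if p["ready"] and all(p["labels"].get(k) == v for k, v in selector.items())
    ]


backends = endpoints({"app": "web"}, pods)   # ['10.0.0.11', '10.0.0.12']
picker = itertools.cycle(backends)           # round-robin over the backends
print([next(picker) for _ in range(4)])      # ['10.0.0.11', '10.0.0.12', '10.0.0.11', '10.0.0.12']
```

Pods that are not ready, like `web-3` here, are left out of the backend list, which is how Kubernetes avoids routing traffic to pods that cannot yet serve it.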

Understanding these components allows teams to effectively manage Kubernetes clusters and optimize deployment strategies in diverse environments.

Benefits of Using Kubernetes Clusters

Kubernetes clusters offer numerous advantages that enhance application management and deployment in cloud-native environments. Two of the most important are scalability and high availability.

Scalability

Scalability is a key benefit of Kubernetes clusters. Once autoscaling is configured, applications can scale up or down based on demand without manual intervention: Kubernetes adds or removes pod replicas in response to traffic fluctuations. Organizations can configure horizontal pod autoscaling, which increases or decreases the number of pod replicas based on CPU and memory usage or other metrics. This flexibility lets capacity track workload demand, maintaining performance without paying for idle resources.
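
The Kubernetes documentation describes the Horizontal Pod Autoscaler’s core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The function below is a minimal sketch of just that formula plus min/max clamping; the parameter names are illustrative, and the real controller additionally handles missing metrics, pods that are not yet ready, and scale-down stabilization.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the HPA scaling formula (simplified)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: CPU at 90% vs a 60% target
print(desired_replicas(4, current_metric=20, target_metric=60))   # 2: scale down
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4: within tolerance
print(desired_replicas(8, current_metric=200, target_metric=50))  # 10: capped at max_replicas
```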

High Availability

High availability is crucial for minimizing downtime in production environments. Kubernetes clusters maintain application availability through built-in redundancy and load balancing. When a node fails, Kubernetes automatically recreates the affected workloads on healthy nodes. Its self-healing capabilities also restart failed containers according to each pod’s restart policy, maintaining service continuity. Organizations benefit from this architecture, which reduces the risk of application outages and enhances overall reliability.
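
Container restarts follow the pod’s `restartPolicy` (`Always`, `OnFailure`, or `Never`), and the Kubernetes documentation describes the delay between restarts of a repeatedly crashing container as an exponential back-off (10s, 20s, 40s, ...) capped at five minutes. The helper names below are hypothetical, and the defaults reflect documented behavior at the time of writing, so check the documentation for your Kubernetes version.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Whether the kubelet restarts a container that exited with exit_code."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy}")


def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> list[int]:
    """Back-off delay (seconds) before each of the first `restarts` restarts."""
    # The delay doubles after every crash but never exceeds the cap.
    return [min(initial_s * 2**i, cap_s) for i in range(restarts)]


print(should_restart("OnFailure", exit_code=0))  # False: clean exit
print(should_restart("OnFailure", exit_code=1))  # True
print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

This growing delay is what surfaces as the familiar `CrashLoopBackOff` status when a container keeps failing.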

Challenges of Managing Kubernetes Clusters

Managing Kubernetes clusters presents several challenges that require careful consideration. Complexity and resource management are two primary obstacles that organizations encounter during deployment and operation.

Complexity

Kubernetes clusters often exhibit high complexity, primarily due to their distributed architecture. Configuring and maintaining multiple components, such as master nodes, worker nodes, and networking elements, can overwhelm teams lacking expertise. The need for intricate configurations increases as applications scale, leading to potential misconfigurations and security vulnerabilities. Upgrading Kubernetes versions introduces additional complexity, as teams must ensure compatibility across all cluster components. Monitoring and troubleshooting issues within a multi-node environment also becomes challenging, necessitating advanced observability tools and practices.

Resource Management

Effective resource management in Kubernetes clusters requires constant attention and optimization. Over-provisioning resources can lead to unnecessary costs, while under-provisioning can compromise application performance. Kubernetes uses resource requests and limits to manage CPU and memory: requests tell the scheduler how much to reserve for a container, while limits cap what it can consume at runtime. Determining optimal settings, however, involves thorough analysis and forecasting. Additionally, the dynamic nature of application workloads demands continuous monitoring and adjustment of resource allocations. Implementing effective autoscaling strategies becomes critical in preventing resource bottlenecks and ensuring application responsiveness during traffic spikes.
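
Requests and limits also determine a pod’s quality-of-service (QoS) class, which Kubernetes takes into account when deciding which pods to evict under node resource pressure. The sketch below applies the documented rules in simplified form: `Guaranteed` when every container has CPU and memory limits equal to its requests, `BestEffort` when no container sets any, and `Burstable` otherwise. The dictionary layout and the minimal quantity parser (which understands only the `m`, `Ki`, `Mi`, and `Gi` suffixes) are assumptions for illustration.

```python
from fractions import Fraction

# Minimal quantity parser: only these suffixes are handled in this sketch.
SUFFIXES = {"m": Fraction(1, 1000), "Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_quantity(q: str) -> Fraction:
    """Convert a quantity such as '500m', '0.5', or '256Mi' to an exact number."""
    for suffix, factor in SUFFIXES.items():
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * factor
    return Fraction(q)


def qos_class(containers: list[dict]) -> str:
    """Simplified QoS classification from each container's requests and limits."""
    if all(not c.get("requests") and not c.get("limits") for c in containers):
        return "BestEffort"
    for c in containers:
        limits = {k: parse_quantity(v) for k, v in c.get("limits", {}).items()}
        # A request that is not set defaults to the corresponding limit.
        requests = {**limits, **{k: parse_quantity(v) for k, v in c.get("requests", {}).items()}}
        if any(r not in limits or requests[r] != limits[r] for r in ("cpu", "memory")):
            return "Burstable"
    return "Guaranteed"


print(qos_class([{"requests": {"cpu": "500m", "memory": "256Mi"},
                  "limits": {"cpu": "0.5", "memory": "256Mi"}}]))  # Guaranteed
print(qos_class([{"requests": {"cpu": "250m"}}]))                  # Burstable
print(qos_class([{}]))                                             # BestEffort
```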

Best Practices for Kubernetes Cluster Management

Kubernetes cluster management involves several best practices that enhance stability and security. These practices ensure efficient operations and safeguard against potential vulnerabilities.

Monitoring and Logging

Monitoring and logging are crucial for maintaining the health of Kubernetes clusters. Implement the following strategies:

- Use Robust Tools: Employ tools like Prometheus for monitoring metrics and Grafana for visualization. These tools provide real-time insights into cluster performance.

- Set Up Alerts: Configure alerts for critical metrics, such as resource usage and node health. Alerts enable proactive management and prompt issue resolution.

- Implement Logging: Centralize logging with solutions like ELK Stack or Fluentd. Centralized logging simplifies troubleshooting and helps analyze application behavior.

- Track Changes: Enable Kubernetes audit logging to record the requests made to the API server, including who changed what and when. This practice enhances security and provides accountability in operations.
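
Alerts on critical metrics are usually configured to fire only after a condition has held for some time, so that brief spikes do not page anyone. Prometheus alerting rules express this with a `for` duration, moving an alert from inactive to pending to firing. The class below is a hypothetical, single-metric imitation of that state machine; real Prometheus rules evaluate PromQL expressions on a schedule, and the 90% threshold and five-minute window are example values only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThresholdAlert:
    """Hypothetical imitation of a Prometheus-style alert with a `for` duration."""
    threshold: float                     # condition: value > threshold
    for_seconds: float                   # how long the condition must hold
    active_since: Optional[float] = None

    def evaluate(self, now: float, value: float) -> str:
        if value <= self.threshold:
            self.active_since = None     # condition cleared: reset
            return "inactive"
        if self.active_since is None:
            self.active_since = now      # condition just became true
        if now - self.active_since >= self.for_seconds:
            return "firing"
        return "pending"


alert = ThresholdAlert(threshold=0.9, for_seconds=300)  # e.g. node memory above 90% for 5 minutes
for t, usage in [(0, 0.95), (120, 0.97), (300, 0.96), (360, 0.50)]:
    print(t, alert.evaluate(t, usage))
# 0 pending / 120 pending / 300 firing / 360 inactive
```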

Security Measures

Implementing security measures is essential for protecting Kubernetes clusters. The following practices enhance cluster security:

- Employ Role-Based Access Control (RBAC): Use RBAC to restrict access to resources based on user roles. This measure reduces the risk of unauthorized access.

- Regularly Update and Patch: Keep Kubernetes and its components up to date with the latest security patches. This practice mitigates vulnerabilities associated with outdated software.

- Conduct Security Audits: Regular security audits identify potential weaknesses in the cluster configuration. Evaluating configurations helps fortify security measures.

- Utilize Network Policies: Implement network policies to restrict communication between pods. This limits exposure to potential threats and enhances cluster security. Policies are only enforced if the cluster’s network plugin supports NetworkPolicy.
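
RBAC rules grant verbs (such as `get`, `list`, or `delete`) on resources within API groups, and permissions are purely additive: there are no deny rules, so a request is allowed if any rule bound to the user permits it. The sketch below models that check. The role and user names are hypothetical, namespaces and ClusterRoles are left out, and in a real cluster the API server performs this evaluation from Role and RoleBinding objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple[str, ...]   # "" is the core API group (pods, services, ...)
    resources: tuple[str, ...]
    verbs: tuple[str, ...]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        def match(allowed: tuple[str, ...], value: str) -> bool:
            return "*" in allowed or value in allowed
        return (match(self.api_groups, api_group)
                and match(self.resources, resource)
                and match(self.verbs, verb))


# Hypothetical roles and bindings (user -> names of roles bound to that user).
roles = {
    "pod-reader": [PolicyRule(("",), ("pods",), ("get", "list", "watch"))],
    "deploy-admin": [PolicyRule(("apps",), ("deployments",), ("*",))],
}
bindings = {"jane": ["pod-reader"], "ci-bot": ["pod-reader", "deploy-admin"]}


def authorize(user: str, api_group: str, resource: str, verb: str) -> bool:
    """Additive check: allowed if any rule from any bound role permits the request."""
    rules = [rule for role in bindings.get(user, []) for rule in roles[role]]
    return any(rule.allows(api_group, resource, verb) for rule in rules)


print(authorize("jane", "", "pods", "list"))                 # True
print(authorize("jane", "", "pods", "delete"))               # False: no rule grants it
print(authorize("ci-bot", "apps", "deployments", "delete"))  # True via the wildcard verb
print(authorize("bob", "", "pods", "get"))                   # False: no bindings
```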

These best practices ensure effective management of Kubernetes clusters, enabling organizations to leverage the full potential of containerization while maintaining security and performance.

Kubernetes clusters have become essential for organizations aiming to streamline the management of containerized applications. Their ability to automate processes and ensure high availability significantly enhances operational efficiency. As businesses increasingly transition to cloud-native architectures, mastering Kubernetes is vital for developers and IT professionals.

While the benefits are substantial, navigating the complexities of Kubernetes requires a deep understanding and proactive management strategies. By embracing best practices in monitoring, security, and resource allocation, teams can mitigate these challenges and unlock the full potential of Kubernetes, positioning their organizations to thrive in an ever-evolving technological landscape. As Kubernetes continues to shape how applications are deployed and managed, it plays a central role in driving digital transformation.